Maggie Collier

I am a researcher with multidisciplinary experience in robotics, assistive technology, and biomedical device development. My current research interests include Human-Robot Interaction, Assistive Robotics, and Disability Studies. Please excuse this messy website; it is still under construction. I am pursuing a Ph.D. in Robotics at Carnegie Mellon University (CMU), where I conduct assistive robotics research in the Human and Robot Partners Lab (HARP Lab). In the spring of 2019, I was awarded the National Defense Science and Engineering Graduate Fellowship to fund my graduate education. In 2019, I earned two Bachelor of Science degrees (Electrical Engineering and Biomedical Engineering) from the University of Alabama at Birmingham (UAB). During my time at UAB, I conducted three years of tissue engineering research and participated in two robotics summer research programs: Georgia Tech's 2017 SURE Robotics program (in Prof. Charlie Kemp's lab) and Carnegie Mellon's 2018 Robotics Institute Summer Scholars program (in Prof. Henny Admoni's HARP Lab). I was also named a Goldwater Scholar in 2017.

Email / GitHub / Google Scholar / LinkedIn / CV


Research

I'm interested in Human-Robot Interaction, Assistive Robotics, and Disability Studies.


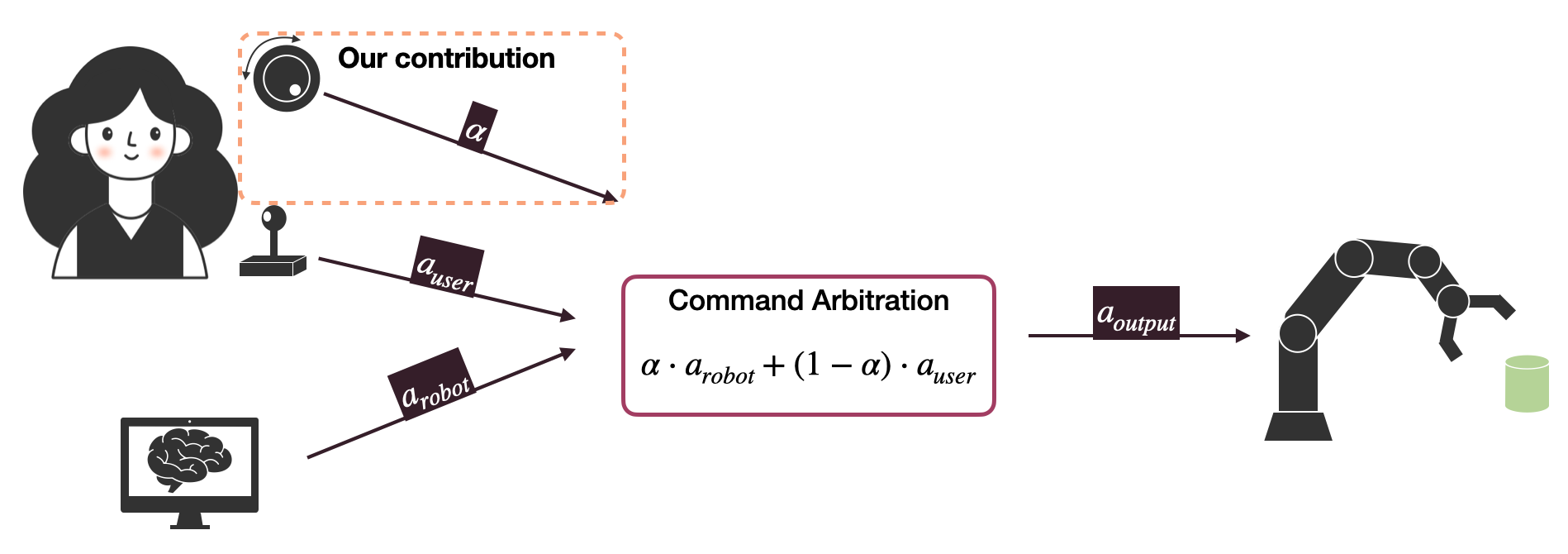

Uncovering People's Preferences for Robot Autonomy in Assistive Teleoperation
Human and Robot Partners Lab, Carnegie Mellon University, 2021
paper / poster
ABSTRACT: What factors influence people's preferences for robot assistance? Answering this question can help roboticists formalize assistance that leads to higher user satisfaction and greater user acceptance of assistive technology. Assistive robotics literature often proposes paradigms that aim to optimize task success metrics or measures of users' perceived task complexity and cognitive load. Yet participants in these studies frequently prefer paradigms that do not perform optimally on these metrics. Task success and cognitive load metrics alone therefore do not capture all of the factors that shape users' needs or desires for robotic assistance. We focus on a subset of assistance paradigms for manipulation, called assistive teleoperation, in which the system combines control signals from the user and from the automated assistance. In this work, we study potential factors that influence users' preferences for assistance during object manipulation tasks. We design a study to evaluate two factors (the magnitude of end effector movement and the degrees of freedom being controlled) that may influence how much automated assistance the user wants.


Eye Gaze Behavior in Teleoperation of a Robot in a Multi-stage Task
Human and Robot Partners Lab, Carnegie Mellon University, 2020
paper / poster
ABSTRACT: Individuals with motor disabilities can use assistive robots to perform activities of daily living independently. Many of these activities, such as food preparation and dressing, are complex and comprise numerous subtasks, which can often be performed in a variety of sequences to achieve a person's overall goal. Because different subtasks require different types or levels of assistance, the variety of subtask sequences available to the user makes robotic assistance challenging to implement in a multi-stage task. However, a system that can anticipate the user's next intended subtask can tailor the assistance it provides throughout a multi-stage task. Psychology research indicates that non-verbal cues, such as eye gaze, can reveal people's strategies and goals while they manipulate objects. In this work, we investigate the use of eye gaze to anticipate user goals during telemanipulation of a multi-stage task.


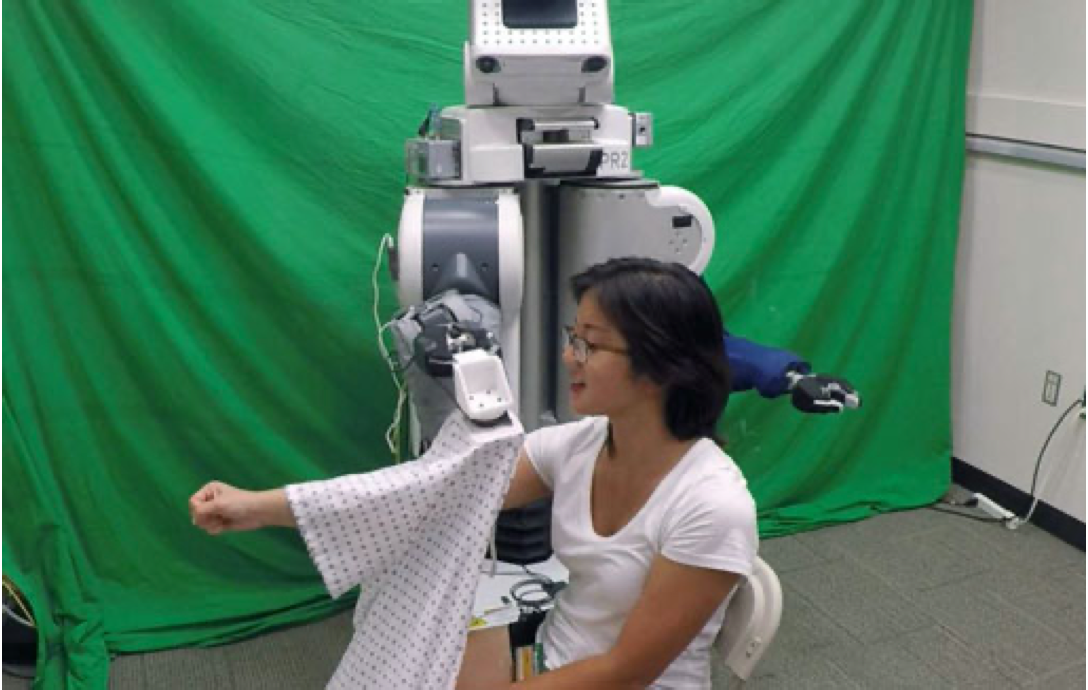

Capacitive Sensing in Robot-Assisted Dressing
Healthcare Robotics Lab, Georgia Tech, 2017
paper
ABSTRACT: Dressing is a fundamental task of everyday living, and robots offer an opportunity to assist older adults and people with motor impairments who need help with dressing. While several robotic systems have explored robot-assisted dressing, few have considered how a robot can manage errors in human pose estimation or adapt to human motion during dressing assistance. In addition, estimating pose changes caused by human motion can be challenging with vision-based techniques, since dressing by design visually occludes the body with clothing. We present a method that uses proximity sensing to enable a robot to account for errors in the estimated pose of a person and to adapt to the person's motion during dressing assistance.


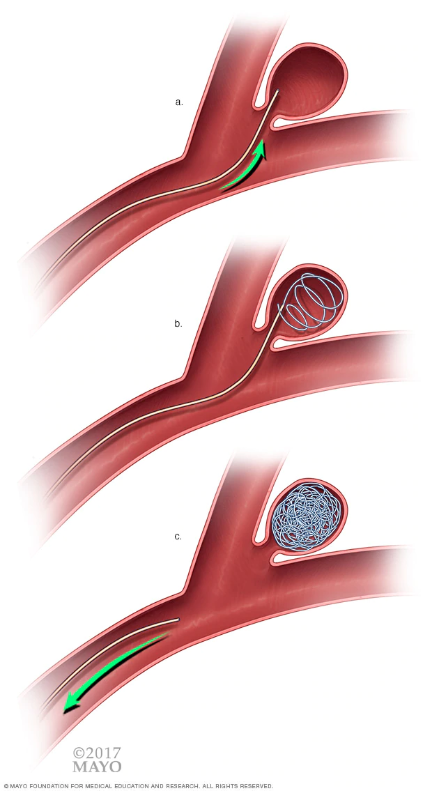

Improving Coil Embolization of Brain Aneurysms
Biomedical Engineering Dept., UAB, 2017
ABSTRACT: Brain aneurysms are a health risk that must be treated as quickly and efficiently as possible to prevent death and the brain damage often associated with hemorrhage. Surgical clipping and coil embolization are the two most effective treatments for ruptured brain aneurysms, yet both are problematic. Surgical clipping requires a highly invasive craniotomy. Coiling carries a higher risk of rebleeding (aneurysmal rerupture) than clipping, and rebleeding has a mortality rate of 70%. This higher rate of rebleeding is thought to result from poor aneurysmal healing in the months following initial coil placement. This project aimed to reduce rebleeding in brain aneurysms treated with coiling by enhancing this healing process. The lab had previously demonstrated a self-assembling nanomatrix coating that provides an endothelium-mimicking microenvironment on the surface of cardiovascular devices. We hypothesized that this kind of environment might encourage proper aneurysmal healing. We therefore applied the coating to the surface of the coils in an attempt to reduce the risk of rebleeding associated with coil embolization.

Other Projects

These include coursework, side projects, and unpublished research work.


Assistive Applications, Accessibility, and Disability Ethics (A3DE) Workshop at HRI 2024
Organizing, 2024-03-01
paper
Description: This full-day workshop addresses accessibility in HRI and how it intersects with ethical considerations for disability-centered design and research, accessibility concerns for disabled researchers, and the design of assistive HRI technologies. The workshop will include keynote speakers from academia and industry, a discussion with expert panelists from academia, industry, and disability advocacy, and activities and breakout sessions designed to facilitate conversations about the accessibility of the HRI community, assistive technology research, and research ethics across all areas of HRI (technical, social, psychological, design, etc.). We will explore guidelines for research ethics in this area of HRI, as well as new directions for this area of research.


EE Capstone Project: Autonomous Robot for Hardware Competition
Capstone, 2019-04-01
Description: For our EE senior capstone, my team and I built an autonomous robot for the IEEE SouthEast Student Competition. I worked on the localization component, which is shown in the project thumbnail.


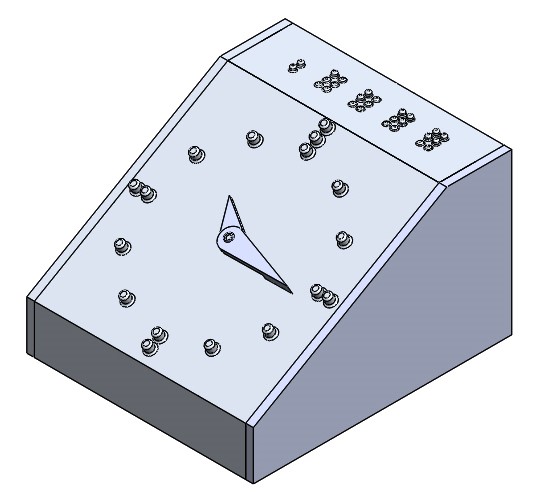

BME Capstone Project: Alarm Clock for People with Deaf-Blindness
Capstone, 2017-04-01
Description: A request from the Alabama Department of Rehabilitation Services revealed the need for an inexpensive timekeeping and alarm device that people with deaf-blindness can set without help from a caretaker, increasing their independence. Guided by the price and quality gaps between existing solutions and the desired device, we developed an alarm clock with three features. It has an analog clock face that can be read by touch. Its electrical interface matches the one used by the standard bed vibrators already common in the deaf community. Finally, a "reverse braille" push-button mechanism sets both the current time and the alarm. The reverse braille input mechanism is itself novel: its minimalist design contrasts with modern designs, which have become increasingly complex. Beyond our cost-effective alarm clock, the reverse braille input mechanism could be applied to a wide variety of niche uses for individuals with deaf-blindness, potentially increasing their independence substantially.


Design and source code from Jon Barron's website